Proposed Legal Reforms For Expanding Liability

April 18, 2025 by Mohr Marketing

Introduction To Online Platforms And Liability

Online platforms have become integral to modern communication, commerce, and social interaction. They serve as the backbone of digital society, enabling users to share information, engage in commerce, and connect with others across the globe. However, the rapid proliferation of content published on these platforms has raised significant concerns about harmful material, such as hate speech, misinformation, and illicit activities.

These concerns have brought the issue of liability into sharp focus as governments, regulatory bodies, and the public grapple with questions of accountability. Traditional legal frameworks, which were not designed with the digital age in mind, are being tested and reshaped to address these challenges. The debate centers on how much responsibility online platforms should bear for the content hosted on their sites and how actively they should monitor and intervene in user-generated content.

Current Legal Framework And Limitations

In the United States, the legal framework governing online platforms’ liability for harmful content hinges primarily on Section 230 of the Communications Decency Act (CDA). This provision grants platforms immunity from being treated as the publisher or speaker of third-party content, thus shielding them from liability related to user-generated content. Similar protections exist in other jurisdictions, such as the European Union’s E-Commerce Directive, which provides a safe harbor for hosting platforms that act expeditiously to remove illegal content once notified.

However, these frameworks face criticism for not adequately holding platforms accountable for the harmful or illegal content they facilitate. Limitations arise as the rapid growth of digital content and evolving forms of harm online outpace legislative adaptations, leading to calls for reform. Critics argue these frameworks lack precise definitions and sufficient mechanisms to compel proactive monitoring and management of harmful content.

Case Studies Highlighting Consequences Of Harmful Content

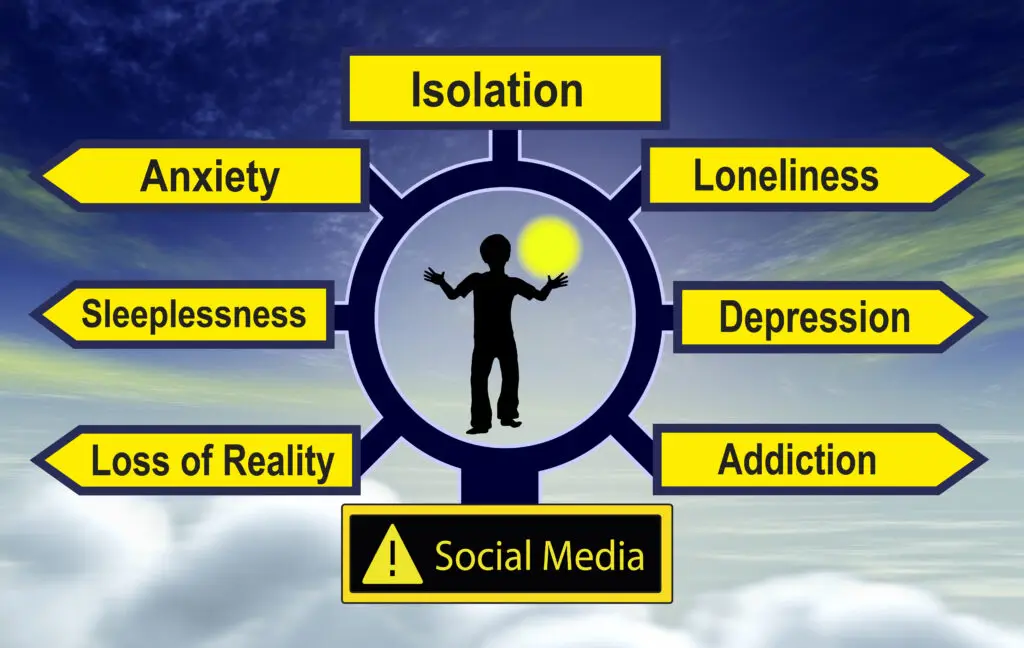

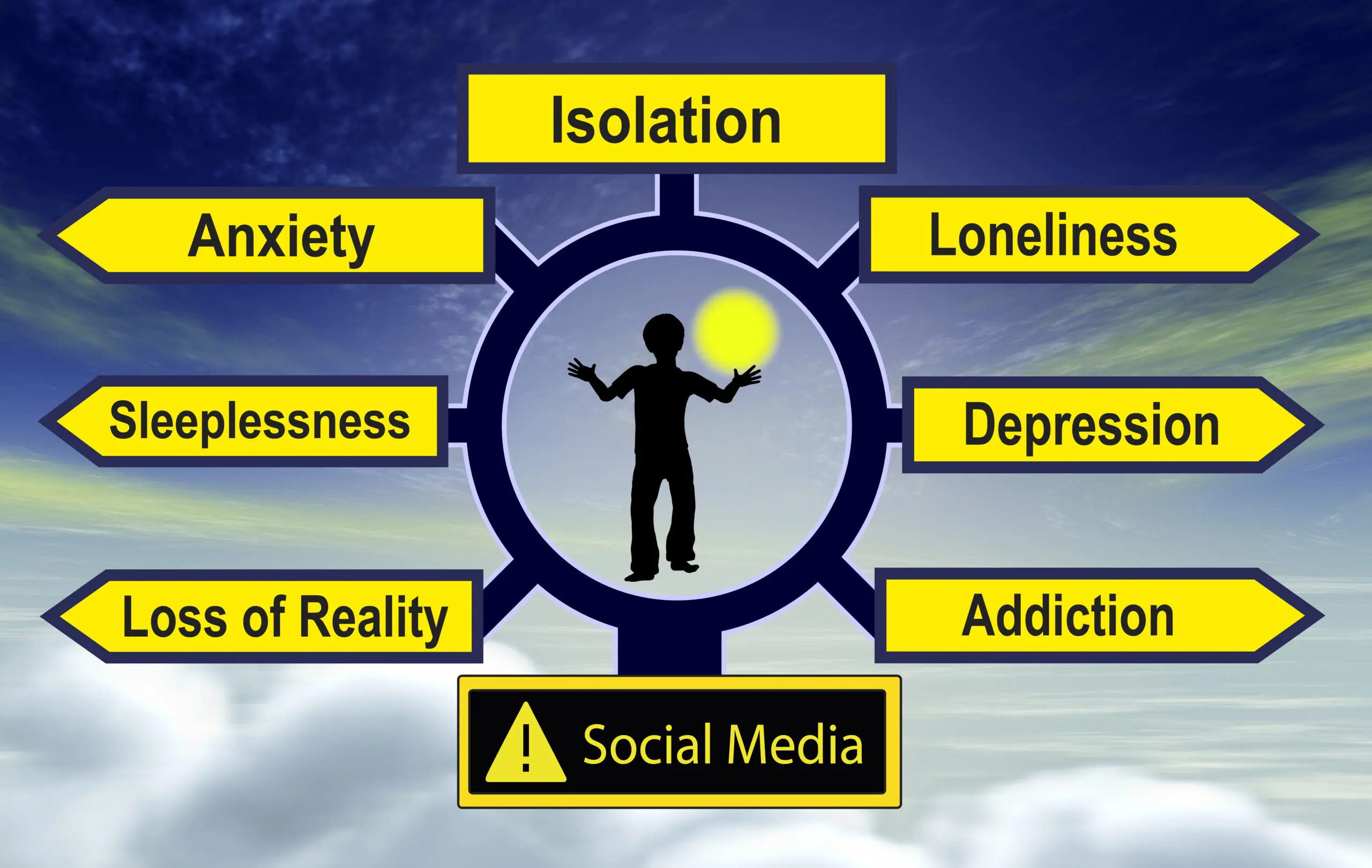

Several high-profile cases illustrate the consequences of harmful content on online platforms. One such example is the tragic case of a teenager who took her own life after being exposed to damaging and distressing images on a social media platform. This incident highlighted how algorithms can inadvertently promote content that is detrimental to mental health, sparking public outcry and legal scrutiny.

Another case involved the spread of misinformation about health treatments, which led to significant public harm during a health crisis. This misinformation was rapidly disseminated across multiple platforms, resulting in people eschewing scientifically proven treatments in favor of dangerous alternatives. Additionally, there was an incident involving live-streamed violence, where the platform’s delay in response allowed the content to proliferate, causing widespread social alarm and distress.

These examples underline the urgent need for robust regulatory frameworks to mitigate the impact of harmful content online.

Proposed Legal Reforms For Expanding Liability

Proposed legal reforms aimed at expanding liability for online platforms regarding harmful content focus on increasing accountability and ensuring safer digital environments. These reforms suggest that platforms implement more rigorous content moderation practices and assume greater responsibility for user-generated content. Legal frameworks could mandate the adoption of advanced detection technologies and algorithms to proactively identify and mitigate harmful content.

Additionally, reform proposals may include the introduction of clearer guidelines and definitions of harmful content, compelling platforms to swiftly remove or restrict access to such material. Legislative changes could also impose stricter penalties for non-compliance to incentivize diligent oversight. Moreover, reforms might consider the establishment of independent oversight bodies to monitor platform practices and ensure transparency in content management. Such measures aim to protect user expression while minimizing the potential spread of harmful online content.

Challenges And Controversies Surrounding Liability Expansion

Expanding liability for online platforms concerning harmful content presents numerous challenges and controversies. One primary concern is the potential stifling of free expression. Increased liability pressures platforms to over-censor content to avoid legal repercussions, potentially suppressing legitimate speech and stifling diverse viewpoints. Additionally, the global nature of the Internet complicates the enforcement of national laws, creating jurisdictional challenges and raising conflicts with international norms.

Platforms may face difficulties in effectively moderating vast amounts of content, leading to inconsistent policy application. The growth of artificial intelligence in content moderation brings further issues of bias and errors, which can exacerbate the problem. Furthermore, smaller platforms may struggle with compliance costs, potentially leading to reduced competition and consolidation within the industry. Balancing the protection of users from harm with the preservation of a free and open internet remains a deeply contentious issue.

Potential Impacts On Online Platforms And Users

Expanding liability for online platforms regarding harmful content could significantly impact both platforms and users. Platforms might face heightened scrutiny and increased operational costs as they invest in more robust content moderation technologies and teams to comply with stricter regulations. This could lead to more conservative approaches in content management, potentially resulting in over-censorship as platforms aim to mitigate risks. Users might experience reduced access to diverse viewpoints and conversations as platforms err on the side of caution.

Additionally, smaller platforms could struggle with compliance due to limited resources, potentially stifling innovation and competition. However, increased liability could enhance user safety and trust by holding platforms accountable for harmful content. This might encourage a more respectful online environment by deterring the spread of misinformation and toxic behavior. The balance between free expression and responsible moderation remains a critical consideration.


Susan Mohr

Mohr Marketing, LLC

CEO and Founder

Copyright © 1994-2025 Mohr Marketing, LLC. All Rights Reserved.